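Agent

In the LangChain framework, an agent is the entity that drives decision-making. It has access to a set of tools and decides which tool to call based on the user's input. Used correctly, an agent can be very powerful. It has three main components:

- Tool: a function that performs a specific duty.

- LLM: the language model that powers the agent.

- Agent: the agent to use, selected by name when the agent is initialized.

Example code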

    import os

    from dotenv import load_dotenv
    from langchain import SerpAPIWrapper
    from langchain.agents import initialize_agent, Tool
    from langchain.llms import OpenAI

    # load_dotenv() copies OPENAI_API_KEY, OPENAI_API_BASE and SERPAPI_API_KEY
    # from a local .env file into os.environ, so no manual reassignment is needed.
    load_dotenv()
    print(os.getenv("OPENAI_API_BASE"))

    llm = OpenAI(temperature=0)
    search = SerpAPIWrapper()

    tools = [
        Tool(
            name="Search",
            func=search.run,
            description="useful for when you need to answer questions about current events",
        ),
        Tool(
            name="Music Search",
            # Mock function: always returns the same answer.
            func=lambda x: "'All I Want For Christmas Is You' by Mariah Carey.",
            description="A Music search engine. Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'",
        ),
    ]

    agent = initialize_agent(
        tools, llm, agent="zero-shot-react-description", verbose=True
    )
    # Print the prompt template the agent sends to the LLM.
    print(agent.agent.llm_chain.prompt.template)
    agent("what is the most famous song of christmas?")

Output:

Answer the following questions as best you can. You have access to the following tools:

Search: useful for when you need to answer questions about current events

Music Search: A Music search engine. Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Search, Music Search]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: {input}

Thought:{agent_scratchpad}

> Entering new AgentExecutor chain...

I should search for a popular christmas song

Action: Music Search

Action Input: most famous christmas song

Observation: 'All I Want For Christmas Is You' by Mariah Carey.

Thought: I now know the final answer

Final Answer: 'All I Want For Christmas Is You' by Mariah Carey.

> Finished chain.

How it works

Main logic

The core is the _call method of AgentExecutor:

    def _call(self, inputs: Dict[str, str]) -> Dict[str, Any]:
        """Run text through and get agent response."""
        # Construct a mapping of tool name to tool for easy lookup
        name_to_tool_map = {tool.name: tool for tool in self.tools}
        # We construct a mapping from each tool to a color, used for logging.
        color_mapping = get_color_mapping(
            [tool.name for tool in self.tools], excluded_colors=["green"]
        )
        intermediate_steps: List[Tuple[AgentAction, str]] = []
        # Let's start tracking the number of iterations and time elapsed
        iterations = 0
        time_elapsed = 0.0
        start_time = time.time()
        # We now enter the agent loop (until it returns something).
        while self._should_continue(iterations, time_elapsed):
            next_step_output = self._take_next_step(
                name_to_tool_map, color_mapping, inputs, intermediate_steps
            )
            if isinstance(next_step_output, AgentFinish):
                return self._return(next_step_output, intermediate_steps)

            intermediate_steps.extend(next_step_output)
            if len(next_step_output) == 1:
                next_step_action = next_step_output[0]
                # See if tool should return directly
                tool_return = self._get_tool_return(next_step_action)
                if tool_return is not None:
                    return self._return(tool_return, intermediate_steps)
            iterations += 1
            time_elapsed = time.time() - start_time
        output = self.agent.return_stopped_response(
            self.early_stopping_method, intermediate_steps, **inputs
        )
        return self._return(output, intermediate_steps)

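The key logic is in the body of the while loop:

- Call the _take_next_step method.

- Check whether the returned result means the run can finish.

- If it can, return the result directly; otherwise repeat the first two steps.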
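The loop can be illustrated without LangChain at all. The following is only a minimal sketch of the plan, act, observe cycle described above: Action, Finish, run_loop and scripted_plan are hypothetical names invented for this illustration, the planner is a hard-coded stand-in for the LLM, and the stop message is made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class Action:  # hypothetical stand-in for AgentAction
    tool: str
    tool_input: str


@dataclass
class Finish:  # hypothetical stand-in for AgentFinish
    output: str


Step = Tuple[Action, str]


def run_loop(
    plan: Callable[[List[Step]], Union[Action, Finish]],
    tools: Dict[str, Callable[[str], str]],
    max_iterations: int = 5,
) -> str:
    """Repeat plan -> act -> observe until the planner returns Finish."""
    steps: List[Step] = []
    for _ in range(max_iterations):
        decision = plan(steps)
        if isinstance(decision, Finish):
            return decision.output
        # Run the chosen tool and remember the (action, observation) pair.
        observation = tools[decision.tool](decision.tool_input)
        steps.append((decision, observation))
    # Made-up message for the case where the iteration budget runs out.
    return "Agent stopped due to iteration limit."


def scripted_plan(steps: List[Step]) -> Union[Action, Finish]:
    """Fake planner: search once, then answer with the last observation."""
    if not steps:
        return Action("Music Search", "most famous christmas song")
    return Finish(steps[-1][1])


tools = {
    "Music Search": lambda q: "'All I Want For Christmas Is You' by Mariah Carey."
}
answer = run_loop(scripted_plan, tools)
print(answer)
```

In the real AgentExecutor the planner is the LLM call, and the loop is additionally bounded by elapsed time.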

The _take_next_step method

Takes a single step in the thought-action-observation loop. Override this method to control how the agent makes and acts on choices.

    def _take_next_step(
        self,
        name_to_tool_map: Dict[str, BaseTool],
        color_mapping: Dict[str, str],
        inputs: Dict[str, str],
        intermediate_steps: List[Tuple[AgentAction, str]],
    ) -> Union[AgentFinish, List[Tuple[AgentAction, str]]]:
        """Take a single step in the thought-action-observation loop.

        Override this to take control of how the agent makes and acts on choices.
        """
        # Call the LLM to see what to do.
        output = self.agent.plan(intermediate_steps, **inputs)
        # If the tool chosen is the finishing tool, then we end and return.
        if isinstance(output, AgentFinish):
            return output
        actions: List[AgentAction]
        if isinstance(output, AgentAction):
            actions = [output]
        else:
            actions = output
        result = []
        for agent_action in actions:
            self.callback_manager.on_agent_action(
                agent_action, verbose=self.verbose, color="green"
            )
            # Otherwise we lookup the tool
            if agent_action.tool in name_to_tool_map:
                tool = name_to_tool_map[agent_action.tool]
                return_direct = tool.return_direct
                color = color_mapping[agent_action.tool]
                tool_run_kwargs = self.agent.tool_run_logging_kwargs()
                if return_direct:
                    tool_run_kwargs["llm_prefix"] = ""
                # We then call the tool on the tool input to get an observation
                observation = tool.run(
                    agent_action.tool_input,
                    verbose=self.verbose,
                    color=color,
                    **tool_run_kwargs,
                )
            else:
                tool_run_kwargs = self.agent.tool_run_logging_kwargs()
                observation = InvalidTool().run(
                    agent_action.tool,
                    verbose=self.verbose,
                    color=None,
                    **tool_run_kwargs,
                )
            result.append((agent_action, observation))
        return result

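- Call the LLM to decide what to do next.

- If the result is an AgentFinish, return it directly.

- If the result is an AgentAction, call the configured tool named by the action.

- Add the AgentAction returned by the LLM, together with the result of the tool call (the observation), to the results.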
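The tool lookup, including the fallback for a tool name the agent does not know, can be sketched in plain Python. This is an illustration under assumptions, not LangChain's code: take_step is a hypothetical helper, actions are reduced to (tool name, tool input) tuples, and the wording of the fallback observation is assumed.

```python
from typing import Callable, Dict, List, Tuple

ToolFn = Callable[[str], str]


def take_step(
    actions: List[Tuple[str, str]],  # (tool name, tool input) pairs
    name_to_tool: Dict[str, ToolFn],
) -> List[Tuple[Tuple[str, str], str]]:
    """Run every requested action and pair it with its observation."""
    result = []
    for tool_name, tool_input in actions:
        if tool_name in name_to_tool:
            observation = name_to_tool[tool_name](tool_input)
        else:
            # Unknown tool: report it as an observation instead of raising,
            # so the model can see the mistake on its next turn.
            # The message wording here is an assumption.
            observation = f"{tool_name} is not a valid tool, try another one."
        result.append(((tool_name, tool_input), observation))
    return result


name_to_tool = {"Music Search": lambda q: "mock result for " + q}
steps = take_step(
    [("Music Search", "christmas song"), ("Video Search", "christmas song")],
    name_to_tool,
)
for action, observation in steps:
    print(action, "->", observation)
```

Returning the error as an observation keeps the loop alive: the next prompt contains the failure, and the model gets a chance to pick a valid tool.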

So how is it decided whether the LLM's output is an AgentFinish or an AgentAction?

To find out, follow the call into the plan method.

The plan method

    def plan(
        self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
    ) -> Union[AgentAction, AgentFinish]:
        """Given input, decided what to do.

        Args:
            intermediate_steps: Steps the LLM has taken to date,
                along with observations
            **kwargs: User inputs.

        Returns:
            Action specifying what tool to use.
        """
        full_inputs = self.get_full_inputs(intermediate_steps, **kwargs)
        full_output = self.llm_chain.predict(**full_inputs)
        return self.output_parser.parse(full_output)

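- Build the input parameters.

- Call the LLM (OpenAI) to get the output.

- Parse the output, which here means deciding from the returned text whether it is an AgentFinish or an AgentAction.

The three steps are examined in turn below.

Building the input parameters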

    def get_full_inputs(
        self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
    ) -> Dict[str, Any]:
        """Create the full inputs for the LLMChain from intermediate steps."""
        thoughts = self._construct_scratchpad(intermediate_steps)
        new_inputs = {"agent_scratchpad": thoughts, "stop": self._stop}
        full_inputs = {**kwargs, **new_inputs}
        return full_inputs

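The prompt template in use looks like the following (this copy appears to come from a setup whose tools are Search and Calculator, so its tool list differs from the example above):

Answer the following questions as best you can. You have access to the following tools:

Search: use this when I ask about the weather or about '鸡你太美'

Calculator: use this for questions about mathematical calculation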

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Search, Calculator]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: {input}

Thought:{agent_scratchpad}

In practice, the main work is building the agent_scratchpad parameter, which is done as follows:

    def _construct_scratchpad(
        self, intermediate_steps: List[Tuple[AgentAction, str]]
    ) -> Union[str, List[BaseMessage]]:
        """Construct the scratchpad that lets the agent continue its thought process."""
        thoughts = ""
        for action, observation in intermediate_steps:
            thoughts += action.log
            thoughts += f"\n{self.observation_prefix}{observation}\n{self.llm_prefix}"
        return thoughts

- action.log: the action text returned by the LLM

- observation_prefix: usually "Observation: "

- observation: the result returned by the tool

- llm_prefix: usually "Thought:"

For example:

- action.log: I should search for a popular christmas song\nAction: Music Search\nAction Input: most famous christmas song

- observation: 'All I Want For Christmas Is You' by Mariah Carey.

The final concatenated result is:

I should search for a popular christmas song

Action: Music Search

Action Input: most famous christmas song

Observation: 'All I Want For Christmas Is You' by Mariah Carey.

Thought:
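The concatenation can be reproduced with a few lines of standalone Python. This is a sketch rather than the library code: construct_scratchpad is a free function here, each step is reduced to a (log, observation) pair of strings, and the two prefixes are hard-coded to the usual values named above.

```python
from typing import List, Tuple

OBSERVATION_PREFIX = "Observation: "
LLM_PREFIX = "Thought:"


def construct_scratchpad(steps: List[Tuple[str, str]]) -> str:
    """Concatenate (action log, observation) pairs into one scratchpad string."""
    thoughts = ""
    for log, observation in steps:
        thoughts += log
        thoughts += f"\n{OBSERVATION_PREFIX}{observation}\n{LLM_PREFIX}"
    return thoughts


steps = [
    (
        " I should search for a popular christmas song\n"
        "Action: Music Search\n"
        "Action Input: most famous christmas song",
        "'All I Want For Christmas Is You' by Mariah Carey.",
    )
]
scratchpad = construct_scratchpad(steps)
# The template already ends with "Thought:", so the scratchpad continues it.
print("Thought:" + scratchpad)
```

Because the scratchpad always ends with the LLM prefix, the next completion picks up exactly where a new thought should begin.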

Calling the LLM

This step mainly consists of interacting with the OpenAI API.

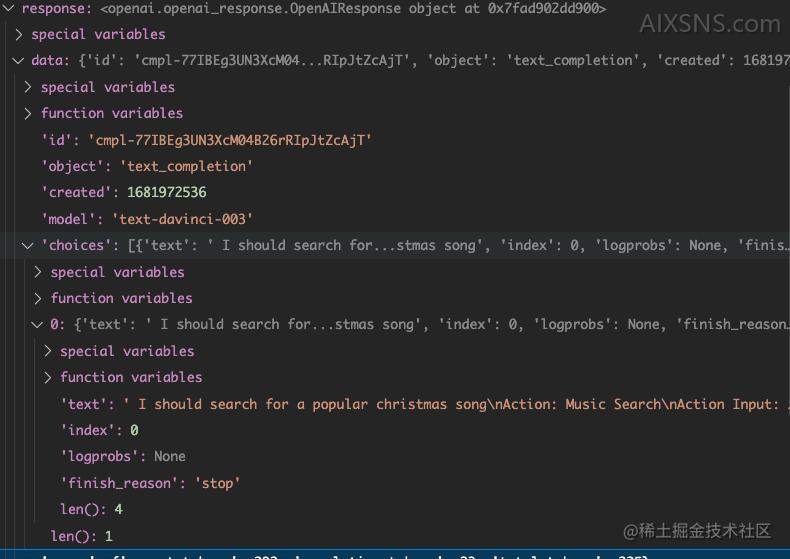

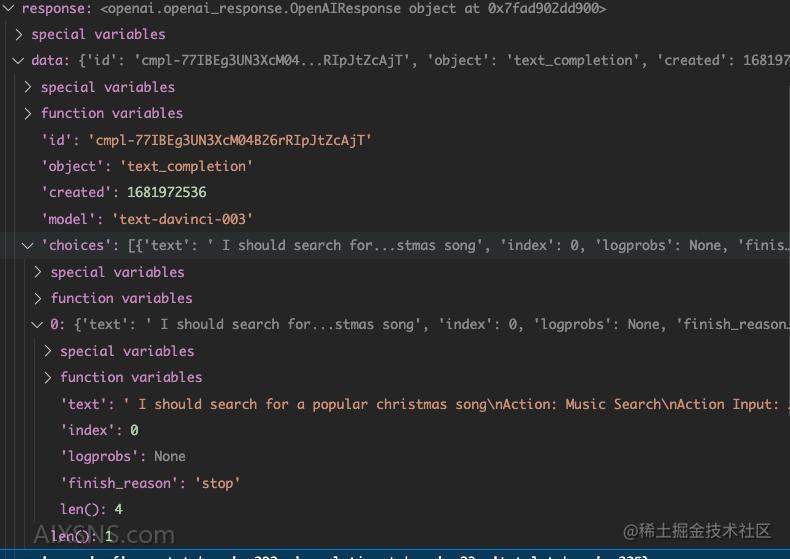

First call

- The prompt is:

"Answer the following questions as best you can. You have access to the following tools:

Search: useful for when you need to answer questions about current events

Music Search: A Music search engine. Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Search, Music Search]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: what is the most famous song of christmas?

Thought:"

- The response text is:

' I should search for a popular christmas song\nAction: Music Search\nAction Input: most famous christmas song'

Second call

- The generated prompt is:

"Answer the following questions as best you can. You have access to the following tools:

Search: useful for when you need to answer questions about current events

Music Search: A Music search engine. Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Search, Music Search]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: what is the most famous song of christmas?

Thought: I should search for a popular christmas song

Action: Music Search

Action Input: most famous christmas song

Observation: 'All I Want For Christmas Is You' by Mariah Carey.

Thought:"

- The response is:

" I now know the final answer\nFinal Answer: 'All I Want For Christmas Is You' by Mariah Carey."
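The two prompts differ only in what is substituted for {agent_scratchpad}. A small sketch makes this visible; SUFFIX is an abbreviated stand-in for the tail of the template (the tool list and format instructions are omitted), and plain str.format is used here instead of LangChain's prompt classes.

```python
# Abbreviated tail of the prompt template; the real one is preceded by
# the tool descriptions and the format instructions.
SUFFIX = "Begin!\n\nQuestion: {input}\nThought:{agent_scratchpad}"

question = "what is the most famous song of christmas?"

# First call: nothing has happened yet, so the scratchpad is empty.
first = SUFFIX.format(input=question, agent_scratchpad="")

# Second call: the scratchpad holds the first action and its observation.
scratchpad = (
    " I should search for a popular christmas song\n"
    "Action: Music Search\n"
    "Action Input: most famous christmas song\n"
    "Observation: 'All I Want For Christmas Is You' by Mariah Carey.\n"
    "Thought:"
)
second = SUFFIX.format(input=question, agent_scratchpad=scratchpad)

print(first)
print("-----")
print(second)
```

Each call therefore resends the whole history; the second prompt is the first prompt plus everything that has happened since.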

Parsing the result

MRKLOutputParser is used by default:

    class MRKLOutputParser(AgentOutputParser):
        def get_format_instructions(self) -> str:
            return FORMAT_INSTRUCTIONS

        def parse(self, text: str) -> Union[AgentAction, AgentFinish]:
            if FINAL_ANSWER_ACTION in text:
                return AgentFinish(
                    {"output": text.split(FINAL_ANSWER_ACTION)[-1].strip()}, text
                )
            # \s matches against tab/newline/whitespace
            regex = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"
            match = re.search(regex, text, re.DOTALL)
            if not match:
                raise OutputParserException(f"Could not parse LLM output: `{text}`")
            action = match.group(1).strip()
            action_input = match.group(2)
            return AgentAction(action, action_input.strip(" ").strip('"'), text)

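- Simple string matching distinguishes AgentFinish from AgentAction: if the text contains the final-answer marker the result is an AgentFinish, otherwise the regex extracts the action and its input.

For example, the first response: I should search for a popular christmas song\nAction: Music Search\nAction Input: most famous christmas song

is parsed into an AgentAction:

action: 'Music Search'

action_input: 'most famous christmas song'

The second response: I now know the final answer\nFinal Answer: 'All I Want For Christmas Is You' by Mariah Carey.

is parsed into an AgentFinish:

log: the original text above

return_values.output: "'All I Want For Christmas Is You' by Mariah Carey."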
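The parsing rule can be tried on the two responses with a standalone function. This is a simplified sketch: it reuses the regex shown above, but returns a plain dict for the finish case and a (tool, input) tuple for the action case instead of AgentFinish and AgentAction, raises ValueError instead of OutputParserException, and assumes the final-answer marker is the string "Final Answer:".

```python
import re
from typing import Dict, Tuple, Union

FINAL_ANSWER_ACTION = "Final Answer:"  # assumed value of the marker
ACTION_REGEX = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"


def parse(text: str) -> Union[Dict[str, str], Tuple[str, str]]:
    """Return {"output": ...} for a final answer, else (tool, tool_input)."""
    if FINAL_ANSWER_ACTION in text:
        return {"output": text.split(FINAL_ANSWER_ACTION)[-1].strip()}
    match = re.search(ACTION_REGEX, text, re.DOTALL)
    if not match:
        raise ValueError(f"Could not parse LLM output: `{text}`")
    action = match.group(1).strip()
    action_input = match.group(2).strip(" ").strip('"')
    return action, action_input


first = (
    " I should search for a popular christmas song\n"
    "Action: Music Search\n"
    "Action Input: most famous christmas song"
)
second = (
    " I now know the final answer\n"
    "Final Answer: 'All I Want For Christmas Is You' by Mariah Carey."
)
print(parse(first))
print(parse(second))
```

Because the final-answer check runs first, a response that contains both an action and a final answer is treated as finished.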

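Summary

Analyzing one of the simplest LangChain agents gives a rough picture of how it is implemented. Much of it comes down to prompt design: the prompt leads the LLM to analyze and break down the task step by step and then call the preconfigured tools to complete it. With a carefully designed prompt, the LLM can complete fairly complex tasks on its own, which is presumably what AutoGPT and BabyAGI do. The official LangChain documentation also includes examples of implementing AutoGPT and BabyAGI with LangChain; see its Autonomous Agents section.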
